Synthesizer with an amplitude envelope

The FM synthesizer from earlier, this time with an amplitude envelope. Press a key to trigger the envelope and play the sound.

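    let base_freq = 220;         // carrier frequency in Hz
    let modulation_ratio = 2;    // modulator frequency as a multiple of base_freq
    let modulation_depth = 400;  // amplitude of the modulator

    let carrier, modulator, envelope, freq_slider;

    function setup() {
      createCanvas(400, 400);

      carrier = new p5.Oscillator(base_freq);
      modulator = new p5.Oscillator(base_freq * modulation_ratio);

      // Disconnect the modulator from the speakers and use it
      // to modulate the carrier's frequency instead
      modulator.disconnect();
      modulator.amp(modulation_depth);
      carrier.freq(modulator);

      // Start silent; the envelope shapes the carrier's amplitude
      carrier.amp(0);
      carrier.start();
      modulator.start();

      // Rise to level 1 in 0.05 seconds, then fall to level 0 in 0.1 seconds
      envelope = new p5.Envelope(0.05, 1, 0.1, 0);

      freq_slider = createSlider(200, 1000); // created here, but not used by this sketch
    }

    function keyPressed() {
      envelope.play(carrier);
    }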

What are some extremely long sounds? How long has the morning choir of birds been going on? Birds evolved around 60 million years ago, but when did they start singing? When did the ocean start to make a noise? How long is the Big Bang? Do sounds ever go away and end, or do they just decay to very, very low in amplitude and frequency? What about some short sounds? How long is your heartbeat?

Synthesizer with sequencer

This program runs through a sequence of data points and uses them to change the pitch of a little data melody.

    let data = [14, 22620, 16350, 9562, 14871, 17773];

    let base_freq = 220;
    let modulation_ratio = 2;
    let modulation_depth = 100;

    let carrier, modulator, envelope;

    function setup() {
      createCanvas(400, 400);

      carrier = new p5.Oscillator(base_freq);
      modulator = new p5.Oscillator(base_freq * modulation_ratio);

      // Disconnect the modulator from the speakers and use it
      // to modulate the carrier's frequency instead
      modulator.disconnect();
      modulator.amp(modulation_depth);
      carrier.freq(modulator);

      carrier.start();
      modulator.start();
      carrier.amp(0); // silent until the envelope plays

      envelope = new p5.Envelope(0.1, 1, 0.1, 0);
    }

    function keyPressed() {
      playSequence(data);
    }

    function playSequence(data) {
      // Nothing left to play, e.g. a key was pressed after the sequence finished
      if (data.length === 0) return;

      // Take the next data point off the front of the array and scale it down
      const datum = data.shift() / 10;
      console.log(datum, data);

      carrier.freq(datum);
      // modulator.freq(datum / 10);
      envelope.play(carrier);

      // Schedule the next note while there is data left
      if (data.length > 0) {
        setTimeout(() => {
          playSequence(data);
        }, datum / 10); // milliseconds, i.e. 1000 = 1 second
      }
    }
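To see what the sequencer will do before listening to it, the same arithmetic can be run without any sound. The sketch below is only an illustration: `previewSequence` is a hypothetical helper, not part of p5.js, and it assumes the scaling used above (each value divided by 10 for the frequency, and that result divided by 10 again for the timeout).

```javascript
// Preview the frequency and timeout the sequencer derives from each data point.
// previewSequence is a hypothetical helper for illustration, not a p5.js function.
function previewSequence(values) {
  return values.map((value, index) => {
    const frequency = value / 10; // Hz, the value passed to carrier.freq()
    const isLast = index === values.length - 1;
    // No timeout is scheduled after the final note
    const delay = isLast ? null : frequency / 10; // milliseconds
    return { frequency, delay };
  });
}

const steps = [14, 22620, 16350, 9562, 14871, 17773];
console.log(previewSequence(steps));
```

With this data the first note lands at about 1.4 Hz, far below what we can hear, and every timeout is shorter than a quarter of a second, so the melody goes by quickly.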

Can you guess where the numbers in data, set on the first line, come from? They are the steps Mace took in week 16 of 2023... according to his smartphone. Try your own data, e.g. your steps, the number of calories in your meals, how many emails you received in the past year, or the weather in your neighbourhood. The sequence can be as long as you want. Think about different value ranges, e.g. human hearing spans roughly 20-20000 Hz for carrier frequencies, and a timeout of 1000 milliseconds is one second. You can also use the envelope to change the frequencies or amplitudes of the carrier or modulator(s).
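One way to think about value ranges is to rescale your data so that the smallest value lands on a low pitch and the largest on a high one. The following is a minimal sketch, not the only way to do it: `scaleToRange` is a hypothetical helper (p5.js has a similar built-in, `map()`), and the target range of 110 to 880 Hz is an assumption chosen to sit comfortably inside human hearing.

```javascript
// Linearly rescale a value from the data's own range to a target range.
// scaleToRange is a hypothetical helper for illustration.
function scaleToRange(value, inMin, inMax, outMin, outMax) {
  if (inMax === inMin) return outMin; // constant data: avoid dividing by zero
  const t = (value - inMin) / (inMax - inMin); // 0 at inMin, 1 at inMax
  return outMin + t * (outMax - outMin);
}

const data = [14, 22620, 16350, 9562, 14871, 17773];
const lowest = Math.min(...data);
const highest = Math.max(...data);

// Assumed target range: 110 to 880 Hz
const frequencies = data.map((d) => scaleToRange(d, lowest, highest, 110, 880));
console.log(frequencies);
```

The same helper could be used for the timeouts, for example by rescaling the data to somewhere between 100 and 1000 milliseconds.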

Try out different envelope parameters by making the beginning ("attack") or end ("release") of the sound longer, and try changing the timeout between sounds.
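To get a feel for what the four envelope numbers do, it can help to calculate the shape by hand. The sketch below is a simplified model, not how p5.Envelope is implemented: `envelopeLevel` is a hypothetical helper, and it assumes straight-line ramps, first up to a level over a given time and then on to a second level over a second time.

```javascript
// Level of a simple two-stage envelope at time t (in seconds),
// assuming linear ramps. envelopeLevel is a hypothetical helper for illustration.
function envelopeLevel(t, riseTime, riseLevel, fallTime, fallLevel) {
  if (t <= 0) return 0;
  if (t < riseTime) return riseLevel * (t / riseTime); // ramping up from 0
  if (t < riseTime + fallTime) {
    const progress = (t - riseTime) / fallTime; // 0 at the peak, 1 at the end
    return riseLevel + progress * (fallLevel - riseLevel);
  }
  return fallLevel; // the envelope has finished
}

// The same numbers as new p5.Envelope(0.1, 1, 0.1, 0), sampled every 50 ms
for (let t = 0; t <= 0.25; t += 0.05) {
  console.log(t.toFixed(2), envelopeLevel(t, 0.1, 1, 0.1, 0).toFixed(2));
}
```

Changing the first number stretches the beginning of the sound, and changing the third stretches the end.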

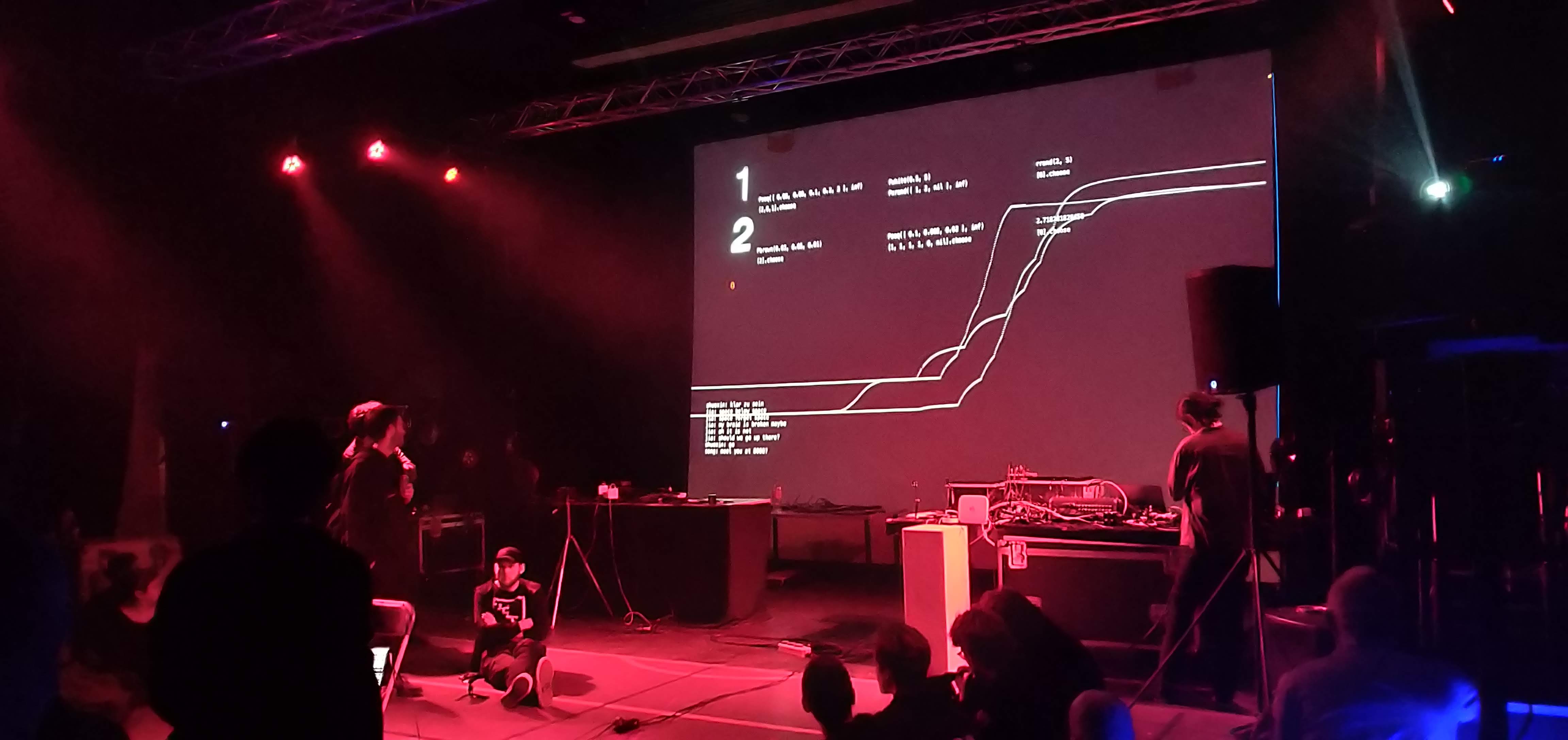

Photos from ICLC by Mace Ojala. You can see the talks and presentations on YouTube at https://www.youtube.com/@incolico, and there is also an archive on YouTube.
